To avoid an unpleasant surprise, such as a cloud bill thousands of dollars higher than you expected, you need to track usage metrics such as utilization, capacity, availability and performance when you run critical applications like EBS, ERP and SAP on a public cloud such as AWS, Azure or Oracle Cloud Infrastructure (OCI). For example, to identify spending waste, you first look at how much of a resource you are using and compare that utilization with its provisioned capacity.

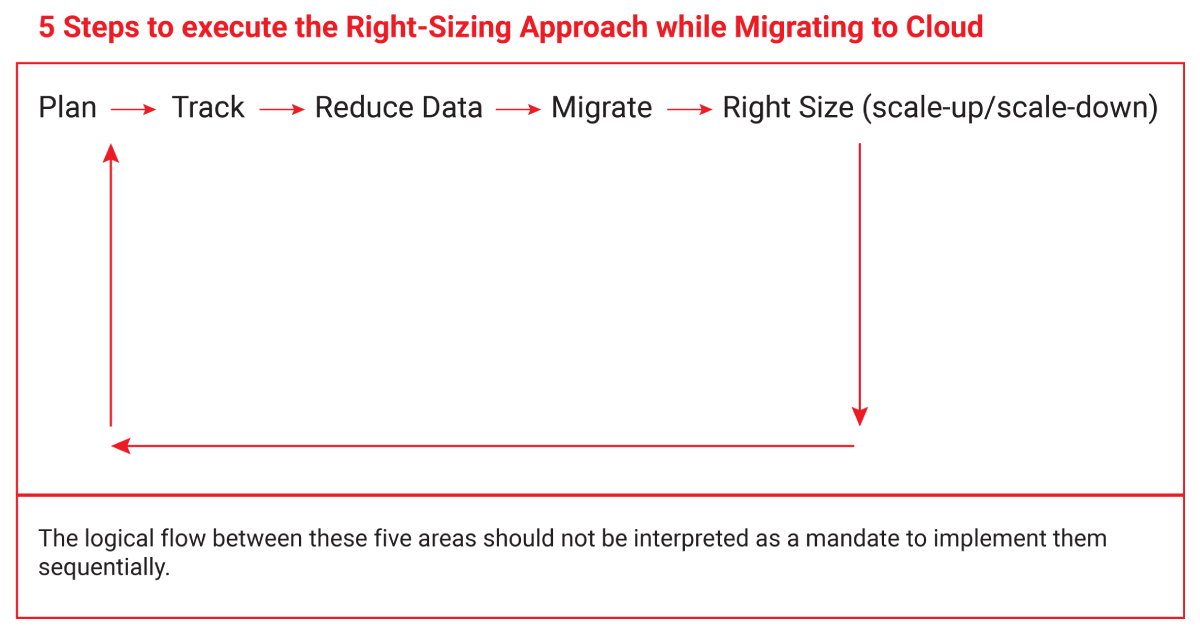
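The comparison of utilization against provisioned capacity takes only a few lines of Python. This is a minimal sketch. It assumes you have already exported average usage and provisioned capacity per resource from your monitoring tool. `ResourceUsage` and `wasted_spend` are hypothetical names, and the linear cost model is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class ResourceUsage:
    """Observed usage of one resource over an observation window (hypothetical schema)."""
    name: str
    provisioned: float  # provisioned capacity, e.g. vCPUs or GB of RAM
    used: float         # average amount actually used over the window

    @property
    def utilization(self) -> float:
        """Fraction of provisioned capacity in use (0.0 when nothing is provisioned)."""
        return self.used / self.provisioned if self.provisioned > 0 else 0.0

    @property
    def unused(self) -> float:
        """Provisioned capacity that is paid for but not used."""
        return max(self.provisioned - self.used, 0.0)


def wasted_spend(resource: ResourceUsage, monthly_cost: float) -> float:
    """Share of the monthly cost that pays for unused capacity.

    Assumes cost scales linearly with provisioned capacity (a simplification).
    """
    if resource.provisioned <= 0:
        return 0.0
    return monthly_cost * resource.unused / resource.provisioned


if __name__ == "__main__":
    vm = ResourceUsage(name="erp-app-01", provisioned=16, used=4)
    print(f"{vm.name}: {vm.utilization:.0%} utilized")  # erp-app-01: 25% utilized
    print(f"~${wasted_spend(vm, monthly_cost=800.0):.2f}/month on unused capacity")  # ~$600.00/month on unused capacity
```

In this example, a 16-vCPU VM that averages 4 vCPUs of use is 25% utilized. Under the linear-cost assumption, three quarters of its bill pays for idle capacity.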

Furthermore, if you reduce spend by shrinking your infrastructure footprint, you need to make sure the change does not hurt availability or performance. This is where cloud rightsizing comes into play.

Cloud Infrastructure Optimization: Best Practices

- Turn off idle instances: Automatically shut down or delete Compute virtual machine (VM) instances that are marked as idle, using a simple and scalable serverless mechanism.

- Keep CPU usage at 55-65%: For the best system performance, avoid oversizing CPUs. Start with the minimum required CPU capacity and expand as needed. Performance is typically best when CPU utilization averages 55% to 65%, with occasional spikes of up to 95%.

- Remember that time of day is a key factor: During the workday, traffic ramps up gradually and can need one to three additional machines, depending on the day and the time of day. Use horizontal scaling accordingly.

- Practice elastic computing: On-demand, pay-as-you-go access to VMs lets you design an elastic computing environment that matches your needs.

- Understand what can and can’t be rightsized: Depending on your billing plans, some components may not be rightsizable. Rightsizing them would require switching to an appropriate cost model.

- Leverage intelligent automation and autoscaling: Automatically scale allocated compute, memory or networking up or down based on business needs or on usage patterns the system observes.

- Review your cloud regularly and critically evaluate spikes: How well your cloud apps handle scaling demands and unpredictable traffic spikes can make or break them.

- CPU is not the only metric to monitor: Continuously observe your metrics and put policies and rules in place to govern them. Beyond CPU, monitor memory and network bandwidth as well.

- Engage experts who can do this work with low-cost resources: Specialist cloud partners can carry out this work and continuously recommend best practices for balancing cost against performance.

- Share regular performance vs. cost reports with line-of-business (LOB) stakeholders: These costs are apportioned to the LOBs, so keep them informed about how their applications perform. Over time, this builds mindshare for future projects.
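Several of the practices above (turning off idle instances, keeping CPU at 55-65% with spikes up to 95%) come down to classifying each instance from its CPU samples. The sketch below is a minimal illustration of that check. The 55%, 65% and 95% thresholds come from the guidance above. The 5% idle cutoff, the labels and the `classify_cpu` name are assumptions, and the samples are assumed to cover a representative window, including peak business hours.

```python
from statistics import mean

# Thresholds follow the guidance above: average CPU of 55-65%, with occasional
# spikes up to 95%. The 5% "idle" cutoff is an assumption for illustration.
IDLE_MAX = 5.0
TARGET_LOW = 55.0
TARGET_HIGH = 65.0
SPIKE_CEILING = 95.0


def classify_cpu(samples: list[float]) -> str:
    """Classify an instance from CPU utilization samples given in percent (0-100)."""
    if not samples:
        raise ValueError("need at least one CPU sample")
    avg, peak = mean(samples), max(samples)
    if peak <= IDLE_MAX:
        return "idle"          # never busy: candidate for automatic shutdown
    if peak > SPIKE_CEILING or avg > TARGET_HIGH:
        return "running hot"   # consider scaling up or out
    if avg < TARGET_LOW:
        return "oversized"     # candidate for downsizing
    return "right-sized"


if __name__ == "__main__":
    fleet = {
        "batch-01": [1.0, 2.0, 1.5],
        "web-01": [20.0, 30.0, 25.0],
        "web-02": [55.0, 60.0, 70.0],
        "db-01": [70.0, 80.0, 99.0],
    }
    for name, samples in fleet.items():
        print(name, classify_cpu(samples))
```

The spike check runs before the average check on purpose. An instance that averages 30% but hits 99% is flagged as running hot rather than as a downsizing candidate, because shrinking it would make those spikes worse.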

LOB and operational needs should be a key consideration when you apply these cloud optimization best practices. Critically assess each instance’s performance characteristics, its risks and the viability of its application, and standardize policies for reviews and tracking.

Leverage ITC’s Oracle Cloud-certified experts for accurate infrastructure visibility and to baseline application workloads and throughput before migration, which helps with initial sizing. We help you gain clear visibility into workload patterns on the cloud, such as what “normal” looks like and how high and how often peaks occur.

Before and after migration, whether during load testing or with real customers in production, we collect resource utilization, delivered user experience and throughput, and use them to adjust the architecture you need on OCI. Timelines can be configured to focus on a specific test cycle or peak period.
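One simple way to capture what “normal” looks like, and how high and how often peaks occur, is to summarize a utilization series. The sketch below assumes evenly spaced samples exported from a monitoring tool. Treating the median as “normal” and calling anything above 1.5× the median a peak are illustrative assumptions, not a standard definition, and `baseline_and_peaks` is a hypothetical name.

```python
from statistics import median


def baseline_and_peaks(samples: list[float], peak_factor: float = 1.5) -> dict:
    """Summarize a utilization series: what 'normal' looks like and how peaks behave.

    'Normal' is the median sample; a peak is any sample above
    peak_factor * median. Both choices are illustrative assumptions.
    """
    if not samples:
        raise ValueError("need at least one sample")
    normal = median(samples)
    threshold = normal * peak_factor
    peaks = [s for s in samples if s > threshold]
    return {
        "normal": normal,
        "peak_threshold": threshold,
        "peak_count": len(peaks),                     # how often peaks occur
        "peak_frequency": len(peaks) / len(samples),  # share of samples that are peaks
        "max_peak": max(peaks) if peaks else None,    # how high the peaks go
    }


if __name__ == "__main__":
    hourly_cpu = [30, 32, 28, 31, 29, 75, 80, 30, 33, 31]  # percent
    print(baseline_and_peaks(hourly_cpu))
```

Running the same summary on pre-migration and post-migration samples for the same test cycle gives two directly comparable baselines.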

5 Key Considerations for Cloud Optimization

- Rightsizing for peaks: Determine your workload demand from the peaks that occur during your observation period, not from average utilization. You don’t want your resized instances to end up unable to handle the peak workload. If your peaks are much higher than the average, consider serving them by scaling out and distributing the workload across multiple smaller resources.

- Assessing constraints: When you change your allocation size, the new size may be subject to constraints you need to be aware of. Select only allocation sizes that are compatible with your workload requirements. For example, compute instance flavors come with a number of CPUs and an amount of RAM, and also with a fixed network and storage bandwidth. If you are using only half the CPU but all of the storage bandwidth, sizing down the instance may hurt its storage performance. Similarly, if you’re running a 64-bit operating system, you can’t select a 32-bit instance, even if it is cheaper and could still deliver the performance you need.

- Mitigating availability risk: Some services, such as compute instances, require a disruptive operation (a reboot) to change size. By contrast, application PaaS services offer zero-downtime upgrades so that incoming requests aren’t dropped. For example, Oracle Application Container Cloud can be configured for zero-downtime updates by subscribing to automatic Kubernetes upgrades. For services that require disruptive operations, factor in the availability risk. Mitigate it by rightsizing only during maintenance windows and limiting rightsizing to once a week or once a month.

- Mitigating performance risk: As you change your allocation size, the new size may not be able to deliver enough performance to serve your workload demand. Mitigate performance risk by inspecting application metrics from application performance management (APM) tools. Alternatively, rightsize in multiple steps and measure the performance impact at each step. Implement continuous rightsizing and be ready to size up as you detect performance issues.

- Starting with the top wasters: If you find many rightsizing opportunities, start with the resources that have the highest cost and lowest utilization. For each overprovisioned resource, calculate the ratio of its cost to its utilization, then sort the list by that ratio in descending order. Work through the list from the top down.
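The “top wasters” ordering from the last consideration can be sketched as a sort on the cost-to-utilization ratio. This is a minimal illustration, assuming each candidate already has a monthly cost and an average utilization fraction. `Candidate`, `rank_top_wasters` and the 1% floor used to avoid dividing by zero for fully idle resources are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """An overprovisioned resource found during analysis (hypothetical schema)."""
    name: str
    monthly_cost: float  # e.g. USD per month
    utilization: float   # average utilization as a fraction, 0.0-1.0


def rank_top_wasters(candidates: list[Candidate], min_util: float = 0.01) -> list[Candidate]:
    """Order candidates by cost / utilization, highest ratio first.

    min_util avoids division by zero: fully idle resources are treated as 1%
    utilized (an assumption), which still ranks them near the top.
    """
    return sorted(
        candidates,
        key=lambda c: c.monthly_cost / max(c.utilization, min_util),
        reverse=True,
    )


if __name__ == "__main__":
    found = [
        Candidate("erp-db", 2000.0, 0.40),
        Candidate("reporting-vm", 600.0, 0.05),
        Candidate("web-tier", 900.0, 0.60),
        Candidate("test-vm", 150.0, 0.0),
    ]
    for c in rank_top_wasters(found):
        print(f"{c.name}: ${c.monthly_cost:.0f}/month at {c.utilization:.0%}")
```

In this example the cheap but idle `test-vm` and the lightly used `reporting-vm` rank above the expensive `erp-db`. The ratio rewards cost that buys little utilization, not raw cost alone.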

To sum up, optimizing capacity management for your cloud services means weighing several factors. Determine workload demand from peak periods rather than average utilization, so that instances can handle the workload. Assess any constraints your workload requirements impose, such as CPU, RAM, network and storage bandwidth. Mitigating availability and performance risks is also crucial when rightsizing cloud services. Finally, start with the most expensive and least utilized resources to achieve savings.
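Sizing for peaks rather than averages can be sketched with a percentile of observed demand plus headroom. This is a minimal illustration, not a prescribed method. The nearest-rank 95th percentile, the 20% headroom and the function names are assumptions. The second helper covers the scale-out option: how many smaller nodes would serve the same peak.

```python
import math
from statistics import mean


def size_for_peaks(samples: list[float], percentile: float = 0.95,
                   headroom: float = 0.20) -> float:
    """Capacity needed to serve observed peak demand (not the average), plus headroom.

    samples: demand in absolute units (e.g. vCPUs in use) over the observation period.
    Uses the nearest-rank percentile; 95th percentile and 20% headroom are
    illustrative defaults, not recommendations from the text.
    """
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(math.ceil(percentile * len(ordered)), 1)  # nearest-rank method
    return ordered[rank - 1] * (1 + headroom)


def nodes_to_scale_out(required: float, node_capacity: float) -> int:
    """Number of smaller nodes needed to serve the required capacity by scaling out."""
    return math.ceil(required / node_capacity)


if __name__ == "__main__":
    vcpus_in_use = [3, 3, 4, 3, 4, 3, 12, 4, 3, 4]
    print("average demand:", mean(vcpus_in_use))
    required = size_for_peaks(vcpus_in_use)
    print("size for peaks:", round(required, 2))
    print("4-vCPU nodes needed:", nodes_to_scale_out(required, 4))
```

Here average demand is about 4.3 vCPUs, but one sample reaches 12. An instance sized from the average would fail at that peak. Sizing for the peak with headroom gives about 14.4 vCPUs, or four 4-vCPU nodes if you scale out instead.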

Continuous rightsizing is gaining importance as serverless capabilities become more prominent among cloud providers. For instance, Oracle Autonomous Database services use continuous rightsizing to scale dynamically with demand. This frees customers from managing capacity themselves and unlocks cost benefits for dynamic workloads.
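Demand-driven scaling of this kind, like the workday ramp-up described in the best practices, is often implemented with a target-tracking rule. The sketch below is a generic illustration of that rule, not how Oracle Autonomous Database scales internally. The 60% default target is taken from the 55-65% CPU band recommended earlier. The function name and the min/max bounds are hypothetical.

```python
import math


def desired_instances(current: int, avg_util: float, target_util: float = 0.60,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Instance count that brings average utilization back toward target_util.

    Target tracking: total load is current * avg_util instance-equivalents, so
    spreading it at target_util per instance needs current * avg_util / target_util
    instances, rounded up and clamped to [min_instances, max_instances].
    """
    if current < 1 or not 0 < target_util <= 1:
        raise ValueError("need current >= 1 and 0 < target_util <= 1")
    # Round before ceil so float noise (e.g. 3.0000000000000004) doesn't add an instance.
    needed = math.ceil(round(current * avg_util / target_util, 9))
    return max(min_instances, min(needed, max_instances))


if __name__ == "__main__":
    # Morning ramp-up: 2 instances at 95% CPU -> scale out.
    print(desired_instances(current=2, avg_util=0.95))  # 4
    # Quiet evening: 4 instances at 20% CPU -> scale in.
    print(desired_instances(current=4, avg_util=0.20))  # 2
```

The clamp keeps a single noisy reading from scaling the fleet to zero or without limit. In practice you would also average utilization over a window and add a cool-down between scaling actions.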